Since the IP geographic data operates on ranges of IP addresses, we cannot simply join the log data to the IP data (using RDBMS or MapReduce), and we cannot use a simple key/value cache to look-up the IP data. Currently the entire IP2Location DB-5 data set consumes 1+ GB of memory when loaded into a Java object array. While we can currently fit this in memory with the current infrastructure we will have issues if this data grows significantly beyond it’s current size. Therefore we have to look at some alternatives for looking up the data without loading it all in memory. We cannot use a middleware or shared database solution since these will quickly become overwhelmed by requests from our cluster. Likewise, we cannot afford to take a network hit for every look-up and we currently cannot batch look-up requests to reduce network hits. We need a shared-nothing architecture, and therefore copying a Java embedded database locally to pull our small data close to our big data seems the best approach. This page evaluates several Java embedded databases as a fit for the use case.

This test is Hadoop/Cascading specific in that it uses the standard set of jar files included in the class path of Apache Hadoop 20.2 and Cascading 1.2. For example, we used the hsqldb-1.8.0.10.jar bundled with Hadoop. Leveraging an embedded DB which is not only thread safe but also concurrent will allow re-use of in-process memory cache across multiple threads using the Hadoop Task JVM Reuse feature.

Databases Evaluated

The following databases were evaluated:

- Baseline load into in-memory array.

- Apache Derby 10.7.1.1

- HSQLDB 1.8.0.10

- H2 1.2.147

Each of the databases were set up, configured, and queried using identical SQL scripts and JDBC code, except where specifically noted in the test results section. Also, we require the exclusive use of a JDBC interface to the database so we can easily swap DBs based on performance and stability.

Test Results

Test results for the in-memory baseline and each of the embedded DBs follows:

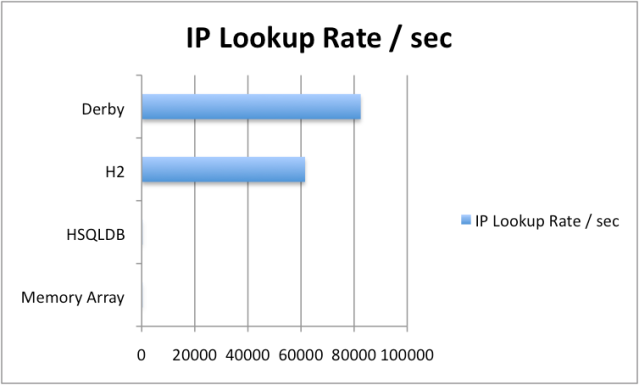

| DB | Load Time | Load Rows | Load Rate | IP Lookup Rate |

|---|---|---|---|---|

| Derby | 1 min, 51 sec | 3,237,642 | 29168 / sec | 82486 / sec **** |

| H2 | 1 min, 10 sec | 3,237,642 | 46252 / sec *** | 61527 / sec |

| in-memory array | 32 sec | 3,237,642 | 101176 / sec | 133 / sec |

| HSQLDB | 3 min, 28 sec | 3,237,642 | 15565 / sec * | 1 / 22 sec ** |

* = -Xmx3G JVM setting was required to load the data

** = -Xmx1G JVM setting was required to query the data

*** = -Xmx1G JVM setting was required to load the data

**** = Only Derby supported concurrent thread access, and 4 threads were used in the IP Lookup test

Test Cases

Create Table

The table was created with the following columns as:

CREATE TABLE IP_INFO ( IP_FROM BIGINT, IP_TO BIGINT, COUNTRY_CODE VARCHAR(2), COUNTRY_NAME VARCHAR(64), REGION VARCHAR(64), CITY VARCHAR(64), LATITUDE DOUBLE, LONGITUDE DOUBLE )

Load Data

The following load scripts where used to load up to the maximum 3,237,642 rows. Data set based on IP2Location DB-5. Some of the Java database tested could not adequately handle the data size so for those databases a smaller number of rows were loaded, as noted in the test results section:

INSERT INTO IP_INFO ( IP_FROM, IP_TO, COUNTRY_CODE, COUNTRY_NAME, REGION, CITY, LATITUDE, LONGITUDE ) VALUES ( ?, ?, ?, ?, ?, ?, ?, ? )

In addition, the following JDBC code to load the data utilizes prepared statements and JDBC batch processing:

Connection conn = DriverManager.getConnection(<db url>);

PreparedStatement ps = conn.prepareStatement(insertString);

// read data file and get fields for each line ...

// for each field, bind values to the PreparedStatement

ps.setLong(1, g.getIpFrom());

ps.addBatch();

// add batch for every line, and execute batch every 100 lines

if(lineNumber % 100 == 0) {

ps.executeBatch();

}

// at the end, execute final batch and commit ...

ps.executeBatch();

conn.commit();

Create Index

A b-tree index on IP_TO is required to select the matching IP range:

CREATE INDEX IP_INFO_I01 ON IP_INFO ( IP_TO )

Select IP Number in Range

The first row matching the following SELECT query matches the geographic information for an IP address range. Any additional rows are not read and are ignored:

SELECT * FROM IP_INFO WHERE IP_TO >= ? ORDER BY IP_TO

The following JDBC code utilizes prepared statements and bind variables to select the first matching row:

PreparedStatement ps = conn.prepareStatement(<sql>);

ps.setFetchSize(1);

ps.setMaxRows(1);

// the following code iterates for each look up test execution

long random = (long)(Math.random() * Integer.MAX_VALUE);

ps.setLong(1, random);

ResultSet rs = ps.executeQuery();

if(rs.next()) {

// test getting fields, and verify result

}

Test System Configuration

Test was run on the following platform configuration:

Hardware Overview:

Model Name: MacBook Pro

Model Identifier: MacBookPro6,2

Processor Name: Intel Core i7

Processor Speed: 2.66 GHz

Number Of Processors: 1

Total Number Of Cores: 2

L2 Cache (per core): 256 KB

L3 Cache: 4 MB

Memory: 4 GB

Processor Interconnect Speed: 4.8 GT/s

Boot ROM Version: MBP61.0057.B0C

Serial-ATA:

Intel 5 Series Chipset:

Vendor: Intel

Product: 5 Series Chipset

Link Speed: 3 Gigabit

Negotiated Link Speed: 1.5 Gigabit

Description: AHCI Version 1.30 Supported

Disk:

Hitachi HTS545050B9SA02:

Capacity: 500.11 GB (500,107,862,016 bytes)

Model: Hitachi HTS545050B9SA02

Revision: PB4AC60W

System Software Overview:

System Version: Mac OS X 10.6.5 (10H574)

Kernel Version: Darwin 10.5.0

JVM Overview:

java version "1.6.0_22"

Java(TM) SE Runtime Environment (build 1.6.0_22-b04-307-10M3261)

Java HotSpot(TM) 64-Bit Server VM (build 17.1-b03-307, mixed mode)

Modifications to Tests

Some tests had to be modified, either due to time constraints (test could not run in a reasonable time) or because the test crashed or hung.

In Memory Array

We had to run the test with JVM parameter -Xmx2G to ensure all the data could be loaded into memory. Since we are not using a DB, the query logic is simply to iterate through the array to find the first row with IP_TO >= target IP.

Please note: we cannot use a binary search algorithm since we do not know the specific value we are searching for a priori. We considered writing a binary search variant to find the first element >= the target IP, however we could not find a library which does this and we don’t have the time to write and test one ourselves.

Derby

We had to set the block cache size above the default to improve cache hits for multi-threaded access:

System.getProperties().setProperty("derby.storage.pageCacheSize", "524288");

We set the JVM -xmx1G for the IP Lookups and used the JVM default 128M for the data load.

HSQLDB

We had to make many modifications to the code, the DML, and SQL as well as the JVM memory settings to make the data load work. We could not find a way to force HSql to use the b-tree index so the query performance represents the default plan selected by HSQLDB.

Here is a description of the changes and why they were made:

JVM Memory Settings

We started out with the 128M default JVM heap and received an out of memory error before we completely loaded 200,000 rows. We had to conclude that HSql only saves to disk after a commit or checkpoint, and keeps the entire set of data buffered in memory. This was confirmed when we added a CHECKPOINT after each 100,000 rows and still got the same “java.lang.OutOfMemoryError: Java heap space”, but we did see data on disk after each checkpoint.

So we had to set memory to -Xmx3G to load the data set, and we had to take the CHECKPOINT out because it looks like HSql writes all the data to one big file. So every time you do a CHECKPOINT the overhead becomes larger and larger to write the data. It is also possible it’s writing the entire file with every checkpoint as well. Definitely never use CHECKPOINT or incremental commits when writing data set on GB+ sizes, and make sure your available memory is 3 times the size of the data on disk after the load.

When querying the data, again we have the problem of HSql loading the entire data set into memory. I.e. we could not query the data at all with the default 128MB of JVM memory allocated. Setting -Xmx1G works around this problem.

Code and SQL Changes

We could not create the index due to this error:

java.sql.SQLException: java.io.IOException: S1000 Data file size limit is reached in statement [CREATE INDEX IP_INFO_I01 ON IP_INFO(IP_TO)]

We tried to load only 100,000 rows to see if the index is actually used but the best query rate we could get was 17 / sec so if the index was used the performance definitely would not scale to 320 times that data size.

We had to specify CREATE CACHED TABLE in the table DDL to get the data load to work.

H2

H2 also required changes to the JVM settings to work properly.

JVM Memory Settings

With the default 128M JVM settings we were able to reach just over 400,000 rows loaded before running out of heap space. Setting the heap to -Xmx1G fixed this issue. Similarly to HSQLDB it looks like H2 loads the entire data set into memory for the transaction.

We had to specify CREATE CACHED TABLE in the table DDL to get the data load to work.

Conclusion

Derby provides the best fit for this use case due to:

- Concurrency: multiple threads can query embedded Derby within the same JVM process.

- The performance of Derby in multi-threaded mode outstrips H2’s single threaded performance on a 2 core machine. We expect this to scale linearly to our target 16 core production deployment.

- Sharing process memory across multiple threads allows us to scale look up data size while maximizing shared cache hits.

However, H2 demonstrated superior single-threaded performance and admirable load speeds and used 40% less space on-disk than Derby.

All-in-all Derby wins for this use case, and H2 gets admirable mention.

Hi,

I noticed you use ps.setFetchSize(1). To limit the number of returned rows, you need to use ps.setMaxRows(1), and you should also change the query slightly because to guarantee that the row with the lowest IP_TO is returned:

SELECT * FROM IP_INFO WHERE IP_TO >= ? ORDER BY IP_TO

As for the memory usage during import, I could reproduce the out of memory problem for HSQLDB. The reason is, HSQLDB keeps all tables fully in memory by default. You can disable this using an option in the database URL: jdbc:hsqldb:data/test;hsqldb.default_table_type=cached – I couldn’t reproduce the out of memory error for the H2 database however, I’m not sure if you are using an older version of H2.

One tip to speed up the import is to commit every 100 or so rows:

if(lineNumber % 100 == 0) {

ps.executeBatch();

conn.commit(); // <= speed up

}

The last tip I have is to replace the index with a primary key on this column:

CREATE TABLE IP_INFO (

IP_FROM BIGINT,

IP_TO BIGINT PRIMARY KEY,

…

)

In case you need even more speed: I believe it's possible to reduce the memory usage if you normalize the data. As an example, don't store the country name for every row; store a country number instead and keep the distinct country names in a separate table / array. The same for region and maybe city (depending on how many distinct entries are there).

Yes, I’ll have to run through it again using these suggestions when I have time. However I believe that adding a criteria will be more optimal than adding ORDER BY. i.e.:

SELECT * FROM IP_INFO WHERE IP_TO >= ? AND IP_FROM <= ?

Then adding the IP_FROM column to the index should do the trick. (both parameters being IP Number of the incoming address)

I have test cases with millions of IP look ups using the original query that always select the correct row on Derby without the added criteria or the ORDER BY. However, to make this strictly compatible with databases supporting parallel query (such as Oracle) you are right, I need to add the additional criteria or ORDER BY to guarantee consistent results… I am relying right now on the nature of the b-tree

I find your use case cool and I made another test using the free GeoLiteCity database (http://www.maxmind.com/app/geolitecity). The following script only works for the H2 database; you can copy & paste it into the H2 Console tool. On my machine, the H2 database can process 245’000 queries per second. The database file size is about 300 MB, with 3650754 rows in the blocks and 299140 rows in the location table. Loading the database from the CSV files takes about 37 seconds.

drop all objects;

create table location(id int primary key, country varchar,

region varchar, city varchar, postalCode varchar, latitude float,

longitude float, metroCode varchar, areaCode varchar)

as select * from

csvread(‘~/Downloads/GeoLiteCity/GeoLiteCity-Location.csv’);

create table blocks(start long, end long primary key, location int)

as select * from

csvread(‘~/Downloads/GeoLiteCity/GeoLiteCity-Blocks.csv’);

create alias ip2id deterministic as $$

long ip2id(String s) {

String[] x = s.split(“\\.”);

return (Long.parseLong(x[0]) << 24) + (Long.parseLong(x[1]) << 16) +

(Long.parseLong(x[2]) <> 24) + “.” + ((x >> 16) & 255) + “.” +

((x >> 8) & 255) + “.” + (x & 255);

} $$;

select id2ip(start), id2ip(end), country, region, city

from blocks b inner join location l on b.location = l.id

where b.end >= ip2id(‘213.221.238.52’) order by b.end limit 1;

@loop 1000000 select country, region, city

from blocks b inner join location l on b.location = l.id

where b.end >= ? order by b.end limit 1;

— 4076 ms, or about 245000 op/s

You can also load the database fully in memory using the special compressed in-memory file system of H2, using the database URL jdbc:h2:memLZF:test. I tested with “java -Xmx256m” (it only actually uses about 190 MB). In that case querying is a bit slower however (because the on-the-fly compression). If you use the in-memory file system without compression (jdbc:h2:memFS:test) it will need more memory (about 350 MB in theory; I didn’t test). Even more memory is required if you use the regular in-memory database (jdbc:h2:mem:test), but querying is much faster then.

The formatting of the Java functions above is broken, it’s better on StackOverflow.

@Thomas: OK I reran the tests with ORDER BY and setMaxRows(1). I also integrated some comments I received on derby-users about how to use multi-threading in embedded Derby.

I was not able to implement the suggestion you had regarding using the command line tools in H2. We are restricted to a JDBC interface so we can swap DB implementations more easily. We basically need a DAO that exposes the IP Lookup function via Hadoop job and/or a Cascading Function.

We also need to scale the lookup data size so using shared cache across multiple threads is a big plus. So even though H2 has faster single-threaded performance the concurrent thread support in Derby makes it a better choice for our use case. At least until H2 implements multi-threading in embedded mode…

Cheers, Matt

> restricted to a JDBC interface

Sure, it’s a good idea to be database independent.

> concurrent thread support

I understand. H2 already supports multi-threaded access, but for your use case it may not be enough. I know H2 is still relatively weak in this area, it will be improved this year.

I guess you already considered keeping everything in memory. According to my test, all the data should fit in about 64 MB. I used a compact in-memory representation: int arrays for the start address, end address, and location. Then I used a byte[][] for the locations (you could use a String array, but that would use more memory). See my test case. Currently it uses 55 MB. Querying the data should be quite easy (use java.util.Arrays.binarySearch to get the location, and then parse the byte array at the given location).

Yes, all data in memory with H2 would have to be replicated for each JVM so that would be prohibitive in our case. It is better to share one embedded DB across multiple threads in the same JVM, since we have a lot of other processes contenting for memory on the boxes.

Yes, the multi-threading in H2 is thread safe but the concurrent access is not supported yet. Actually when that’s in place we can switch to H2 simply by changing the DAO implementation class!

@Thomas – I was thinking the same thing for the test case. Your approach breaks b/c the simple conversion of IP address to (int) fails in Java. Signed ints cannot hold the full IP address range. Need to either use long or fake unsigned int.

Hi,

> the simple conversion of IP address to (int) fails in Java.

Converting an IPv4 IP address String to a Java signed int and back to a String doesn’t lose any information. However if you compare the signed int with another signed int then it will not work correctly. When using a database, I guess you should use the BIGINT data type. When using Java, convert the signed int to a long before comparing.